This time around, it is not a price manipulation vulnerability like in the last story. Rather, it is a combination of low-risk vulnerabilities that paved the way to what could have been a data breach worthy of the front page.

Client

Let’s call our client Flashy Holdings. Flashy Holdings is a large financial institution in its country, with over 7,000 employees at the time of writing. With nothing more than a large list of IP addresses provided to us, I began the black box testing.

“A black box penetration test requires no previous information and usually takes the approach of an uninformed attacker. In a black box penetration test the penetration tester has no previous information about the target system. The benefit of this type of attack is that it simulates a very realistic scenario.”

— SECFORCE

While there were many interesting vulnerabilities found on their web application, we are going to talk about just one of them: a critical vulnerability that quite easily put their customer data at risk.

Vulnerability and Exploitation

While pen-testing a web application, one of the first things I do is check the website’s robots.txt, which almost always has nothing interesting in it. I still do it every time, like most people in this field, because you never know!

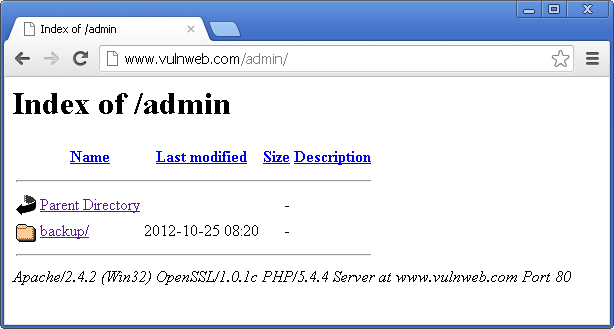

And sure enough, Flashy Holdings had a bunch of detailed paths in their robots.txt that seemed to point to source code, backups and such. So naturally, I had to go through each of them and, to my surprise, all of them had Directory Listing enabled.
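The robots.txt check described here is easy to script. The following is only a minimal sketch of the idea, not the tooling used during the engagement: the function name `extract_robots_paths` and the sample paths are made up for illustration, and the parser only looks at Allow and Disallow lines.

```python
def extract_robots_paths(robots_txt: str) -> list[str]:
    """Return the unique paths named in Allow/Disallow rules, in file order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        # An empty "Disallow:" names no path, so it is skipped.
        if field in ("allow", "disallow") and value and value not in paths:
            paths.append(value)
    return paths


# Hypothetical robots.txt content, not the client's actual file.
sample = """\
User-agent: *
Disallow: /backup/
Disallow: /src/
Disallow:
Allow: /public/
"""

for path in extract_robots_paths(sample):
    print(path)
```

Each path that comes out of this is simply a candidate to visit by hand, which is exactly what I did next.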

But one directory in particular caught my interest. Upon accessing it, I found about 16,000 PDF files containing sensitive customer information, all ready for me, or rather for anyone, to open, read and download. Imagine such information in the hands of an attacker!

What is Directory Listing?

A website becomes vulnerable to Directory Listing when its web server is configured to display the list of files contained in directories that have no index page. These directories may contain sensitive files that are not normally exposed through links on the website. This helps an attacker quickly identify the resources at a given path and proceed directly to analyzing and attacking them.

It particularly increases the exposure of sensitive files within the directory that are not intended to be accessible to the public, such as source code, configuration files, temporary files, crash dumps, website backups or important documents related to the respective business, which in this case were the transaction details.
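To make this concrete, here is a rough heuristic for recognizing an auto-generated listing page from its HTML. It is a sketch under assumptions: the function name is hypothetical, and the markers are just the strings that common servers typically put in their default listing pages (Apache and nginx use an "Index of /..." title, Python's built-in http.server prints "Directory listing for /...", and IIS shows a "[To Parent Directory]" link). A customized listing template would slip past it.

```python
import re

# Strings commonly found in default auto-generated listing pages.
_LISTING_MARKERS = [
    re.compile(r"<title>\s*Index of /", re.IGNORECASE),      # Apache, nginx
    re.compile(r"Directory listing for /", re.IGNORECASE),   # Python http.server
    re.compile(r"\[To Parent Directory\]", re.IGNORECASE),   # IIS
]


def looks_like_directory_listing(html: str) -> bool:
    """Return True if the page body matches any known listing marker."""
    return any(marker.search(html) for marker in _LISTING_MARKERS)


# Hypothetical response bodies for illustration.
listing_page = "<html><head><title>Index of /backup</title></head><body></body></html>"
normal_page = "<html><head><title>Welcome</title></head><body>Hello</body></html>"

print(looks_like_directory_listing(listing_page))
print(looks_like_directory_listing(normal_page))
```

A match is only a hint that a directory deserves a closer look; it says nothing about how sensitive the files inside are.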

How to disable it?

Because there is rarely a good reason to enable directory listings, it is highly recommended to disable them by configuring the web server accordingly for all directories and sub-directories in the webroot.

1. Directory listing can be disabled by adding the following line to your .htaccess file:

       Options -Indexes

2. It is also recommended to place a default file (e.g. an empty index.html) in every directory that has no index page, so that the webserver displays it instead of returning a directory listing.

3. As for the robots.txt configuration, it is recommended to remove directory paths that could reveal sensitive information and place a .htaccess file inside the relevant folders with the following code (the Header directive requires mod_headers to be enabled):

       <FilesMatch "\.(doc|pdf|[FILE_TYPE]|[FILE_TYPE])$">
       Header set X-Robots-Tag "noindex, noarchive, nosnippet"
       </FilesMatch>
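For the second recommendation, it helps to know which directories are missing an index page in the first place. The sketch below walks a webroot on disk and reports them. It assumes a fixed set of index file names (`INDEX_NAMES`), which in practice depends on the server's own configuration, and the function name is hypothetical.

```python
import os

# Assumed index file names; the real list depends on the server configuration.
INDEX_NAMES = {"index.html", "index.htm", "index.php"}


def dirs_without_index(webroot: str) -> list[str]:
    """Return directories under webroot (relative paths) that have no index file."""
    missing = []
    for dirpath, _dirnames, filenames in os.walk(webroot):
        lowered = (name.lower() for name in filenames)
        if not INDEX_NAMES.intersection(lowered):
            missing.append(os.path.relpath(dirpath, webroot))
    return sorted(missing)
```

Calling `dirs_without_index("/var/www/html")` (an example path) returns the relative paths that would fall back to a listing if `Options -Indexes` were ever dropped. The webroot itself is reported as `.` when it has no index file.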

Wrap Up

There is no denying the fact that the risk associated was critical, considering the number of customers affected and the size and place of Flashy Holdings in the industry.

We immediately notified Flashy Holdings of the issue and advised them on how to fix it. Following this, they quickly removed the paths from the robots.txt file and made the files and folders inaccessible. Finding this issue took very few steps, and that alone makes it worth a developer’s attention: it is a simple thing that is easy to miss.

Conclusion

To be honest, the number of websites on which I have found Directory Listing enabled during my pen-test sessions is unbelievable. Even more so when it’s found on sites like Flashy Holdings’, where it exposes the sensitive information of thousands of customers.

That made it all the more educational for me, from a developer’s perspective as well as a security analyst’s, during this initial phase of my career in cybersecurity. It could be the same for someone else too.

As for this particular case, finding and reporting such an easy yet critical vulnerability was something I enjoyed, because Astra’s efforts saved one of our biggest clients from what could have been a large breach had the information been obtained by an attacker.

If you ever need a security audit done on your web application or want to make sure that such vulnerabilities don’t end up resulting in a large data breach, you can always come talk to us at Astra.

Hope you enjoyed the short read!

You can follow me on Medium for more stories about the various security audits I do and the crazy vulnerabilities I find, as well as on Twitter for more cybersecurity-related news.