Introduction

The world of artificial intelligence (AI) has seen rapid advancements, with one of the most remarkable developments being large language models (LLMs) like OpenAI’s ChatGPT. These models have shown incredible capabilities, from generating human-like text to writing code and even solving problems. But how exactly were they created, how do they work, and what distinguishes their abilities from human intelligence?

Think of a language model like an incredibly advanced autocomplete engine trained on the internet’s collective knowledge. This analogy helps highlight the core mechanism of how these models generate responses based on learned patterns, not understanding.

In this article, we’ll break down how LLMs like ChatGPT are trained, their capabilities, and what sets them apart from human intelligence. We’ll also discuss the challenges they face, their potential future, and how they can be improved for more useful applications.

How Were Large Language Models Like ChatGPT Created?

Creating models like ChatGPT involves several intricate steps that blend computer science, linguistics, and massive computational resources. The creation process can be broken down into the following stages:

- Collecting Data

The foundation of an LLM starts with massive datasets. These datasets consist of text from books, websites, articles, forums, social media, and even code repositories. The goal is to expose the model to a wide variety of language patterns, topics, and contexts.

For example, OpenAI reported training GPT-3, a predecessor of the models behind ChatGPT, on sources such as Common Crawl and Wikipedia, totaling hundreds of billions of tokens.

- Data Cleaning and Preparation

After gathering raw data, the next step is cleaning it up. This involves filtering out irrelevant, biased, or harmful content, as well as ensuring the data is structured in a way that can be fed into the model. This process helps the model focus on useful and accurate information during training.

- Tokenization

Before training, text needs to be converted into a format that the machine can understand. This is done through a process called tokenization, where sentences are split into smaller units like words or subwords (tokens). For example, the word ‘information’ might be tokenized into [‘in’, ‘formation’] or a different set of subword tokens, depending on the model’s approach. This helps the model understand parts of words and their relationships to other words in context.
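To make the splitting step concrete, here is a minimal sketch of a subword tokenizer. It assumes a tiny hand-written vocabulary and a greedy longest-match rule; the names `VOCAB`, `tokenize`, and `encode` are hypothetical and chosen only for illustration. Production tokenizers learn their vocabulary from data and may split words differently.

```python
# Toy subword tokenizer: greedy longest-match against a tiny, made-up vocabulary.
# Real tokenizers learn their vocabulary from large amounts of text.
VOCAB = {"in": 0, "formation": 1, "form": 2, "ation": 3, "s": 4, "<unk>": 5}


def tokenize(word, vocab=VOCAB):
    """Split `word` into the longest known vocabulary pieces, left to right."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No known piece starts here: emit an unknown marker for one character.
            tokens.append("<unk>")
            start += 1
    return tokens


def encode(word, vocab=VOCAB):
    """Map each token to its integer id, the form a model actually consumes."""
    return [vocab[token] for token in tokenize(word, vocab)]


print(tokenize("information"))  # ['in', 'formation']
print(encode("information"))    # [0, 1]
```

Greedy matching is only one possible rule. It is used here because it fits in a few lines, not because any particular model is known to use it.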

These tokens are then mapped to integer IDs and, inside the model, to numerical vectors (embeddings) that the model can work with.

- Pretraining the Model

The model is then pretrained on the tokenized data. During this phase, it learns to predict the next token in a sequence. For instance, given the input “The sky is,” the model learns to assign a high probability to a continuation such as “blue.”

The pretraining phase uses neural networks, specifically a transformer architecture, to process this vast amount of data, learning complex relationships between tokens. For instance, GPT-3, one of the largest models at its release in 2020, has 175 billion parameters that help it model these relationships.
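The idea of learning to predict the next token can be illustrated with a much simpler stand-in for a transformer: a bigram model that only counts which token follows which. The corpus and the function names below are made up for this sketch. A real LLM conditions on the whole preceding context and learns billions of parameters rather than a table of counts.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model trains on hundreds of billions of tokens.
CORPUS = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the grass is green",
]


def train_bigram(sentences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {token: n / total for token, n in followers.items()}


def predict_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if `prev` is unseen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram(CORPUS)
print(predict_next(model, "is"))      # blue
print(next_token_probs(model, "is"))  # {'blue': 0.5, 'clear': 0.25, 'green': 0.25}
```

Even in this toy, "training" amounts to adjusting numbers so that frequently observed continuations receive higher probability, which is the same objective described above at a vastly smaller scale.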

How Do Large Language Models Like ChatGPT Work?

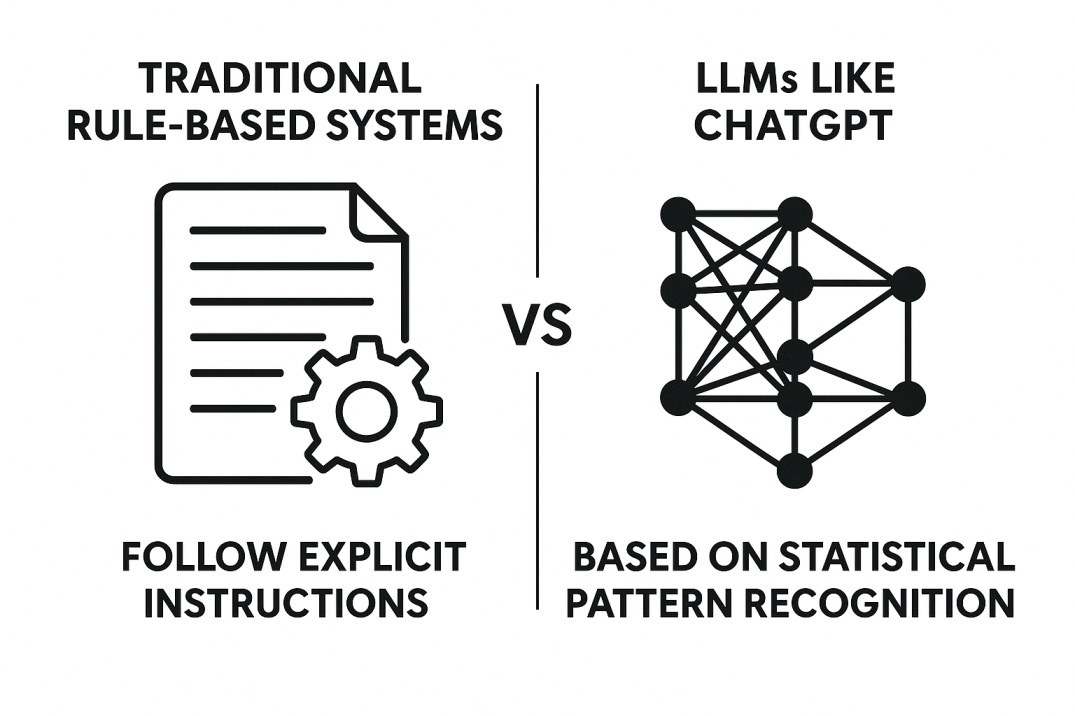

Once trained, ChatGPT can generate text and perform tasks based on the patterns it has learned. But unlike traditional rule-based systems, which follow explicit instructions, LLMs like ChatGPT are based on statistical pattern recognition.

Understanding the Transformer Model

The core architecture behind LLMs is the transformer model, introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. This architecture revolutionized Natural Language Processing (NLP) by enabling parallel processing of language sequences, largely replacing older sequential models like RNNs and LSTMs.

The transformer uses an attention mechanism, which allows the model to weigh the importance of different words in a sentence, regardless of their position. This enables the model to better understand context and relationships between words.

Example:

- Sentence 1: “I ate an apple.”

- Sentence 2: “The apple was delicious.”

In Sentence 1, “ate” is linked to “apple,” and in Sentence 2, “apple” is linked to “delicious.” The attention mechanism helps the model understand that “apple” is a key word in both sentences and how it relates to other words.
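The weighting described above can be sketched with scaled dot-product attention, the operation defined in the transformer paper. Everything numeric here is an assumption for illustration: the two-dimensional vectors are hand-picked rather than learned, and the same vectors are reused as keys and values to keep the example short. In a trained model, queries, keys, and values come from learned projections of the token embeddings.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(dimension).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Hypothetical 2-dimensional vectors for the tokens of "I ate an apple".
tokens = ["I", "ate", "an", "apple"]
keys = [[0.1, 0.0], [1.0, 0.2], [0.0, 0.1], [0.9, 0.8]]
values = keys          # reused as values to keep the toy small
query = [1.0, 1.0]     # made-up query vector standing in for "ate"

weights, output = attention(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token:>5}: {weight:.2f}")
```

With these made-up vectors, “apple” receives the largest weight for the “ate” query, mirroring the link between the two words in Sentence 1.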

Generating Responses

When a user inputs a prompt, the model generates a response one token at a time: it estimates a probability for every possible next token given the conversation so far, selects one (usually a highly probable one), appends it to the text, and repeats until the response is complete.

For example, if asked, “What’s the capital of France?”, ChatGPT will recognize that this question often leads to the answer “Paris” because it has seen similar examples during training.
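A single step of that selection can be sketched as follows. The candidate tokens and their scores (logits) are invented for this example; a real model scores tens of thousands of tokens. The sketch shows two common ways to pick the next token: always taking the most probable one (greedy) or sampling in proportion to probability, with a temperature parameter that flattens or sharpens the distribution.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(candidates, logits):
    """Always choose the single most probable token."""
    probs = softmax(logits)
    return candidates[probs.index(max(probs))]


def sample_pick(candidates, logits, temperature=1.0, rng=random):
    """Choose a token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Made-up scores for the token that follows "What's the capital of France?"
candidates = ["Paris", "Lyon", "London", "banana"]
logits = [6.0, 2.5, 1.5, -3.0]

print(greedy_pick(candidates, logits))  # Paris

rng = random.Random(0)  # seeded so repeated runs give the same samples
print([sample_pick(candidates, logits, temperature=1.5, rng=rng) for _ in range(5)])
```

Sampling explains why the same prompt can yield different responses on different runs, while greedy selection always returns the same one.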

Fine-tuning and Reinforcement Learning

While pretraining teaches the model general language patterns using a massive dataset, fine-tuning narrows its behavior by training it on curated tasks or aligning it with human feedback.

This fine-tuning process uses techniques like Reinforcement Learning from Human Feedback (RLHF), where human evaluators rank candidate responses by usefulness and quality, and the model is then adjusted to favor the kinds of answers that ranked higher. Fine-tuning may also involve training on domain-specific datasets, such as medical texts or code snippets, to improve performance on specific tasks.
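The article does not say how human rankings become a training signal. One common formulation, shown here as an assumption rather than as ChatGPT's exact recipe, trains a separate reward model with a pairwise loss: the loss is small when the response humans preferred receives the higher score. The function name and the example scores are hypothetical.

```python
import math


def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Compute -log(sigmoid(reward_preferred - reward_rejected)).

    The value is small when the human-preferred response scores higher
    and grows as the reward model disagrees with the human ranking.
    """
    margin = reward_preferred - reward_rejected
    # Two algebraically equal forms, chosen to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward-model scores for two responses to the same prompt.
agrees = pairwise_preference_loss(reward_preferred=2.0, reward_rejected=0.5)
disagrees = pairwise_preference_loss(reward_preferred=0.5, reward_rejected=2.0)

print(f"reward model agrees with humans:    {agrees:.3f}")
print(f"reward model disagrees with humans: {disagrees:.3f}")
```

Minimizing this loss over many ranked pairs pushes the reward model toward human preferences; the language model is then tuned to produce responses that the reward model scores highly.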

LLMs like ChatGPT also receive periodic updates from developers to refine behavior, incorporate safety improvements, or expand capabilities.

What Makes ChatGPT Different from Human Intelligence?

At first glance, ChatGPT might seem intelligent; after all, it can answer questions, write essays, and solve problems. However, there are fundamental differences between LLMs and human intelligence:

1. Lack of True Understanding

Humans understand context, emotions, and intention. ChatGPT, on the other hand, does not. It does not have a “mind” or subjective experience. While it can generate text that appears thoughtful, it does not truly understand what it is saying. For instance, if you ask it about the meaning of life, it can give a well-articulated response, but it has no genuine comprehension of the concept.

2. No Reasoning or Consciousness

Humans reason through problems, form new ideas, and reflect on past experiences. ChatGPT doesn’t have this ability. It doesn’t know the meaning behind a sentence or the consequences of an action. It merely generates responses based on learned patterns. If you ask it to solve a complex, novel problem, it might give an answer based on patterns but without genuine insight.

LLMs also struggle with tasks that require real-time sensory input, spatial awareness, or interaction with the physical world – all essential elements of general intelligence.

3. Limited Creativity and Original Thought

Humans are capable of original thought and creativity. While ChatGPT can generate new text based on its training, it cannot truly innovate. It can’t come up with entirely new ideas in the way that humans can. Instead, it mixes and reuses the vast amounts of data it has already been exposed to.

Human Intelligence vs ChatGPT (LLM Capabilities)

| Aspect | Human Intelligence | ChatGPT (LLM) |
|---|---|---|
| Understanding | Deep, contextual, emotional | Pattern-based prediction, no real understanding |
| Learning | Experience-based, dynamic | Trained once; does not adapt from new experiences after deployment |
| Reasoning | Logical, abstract, adaptable | Mimics reasoning via observed patterns |
| Memory | Selective, long-term and short-term | No memory unless specifically integrated |
| Creativity | Original, intentional, emotional | Derivative, remixing prior data |
| Consciousness | Aware, subjective experience | None |
| Goal Orientation | Has desires, goals, motives | Has no intent or purpose |

Applications of Large Language Models Like ChatGPT

Despite their limitations, LLMs have proven incredibly useful in various fields:

Customer Support

Chatbots powered by LLMs are used by companies like banks and airlines to handle customer queries, saving time and costs by automating responses to common questions.

Code Generation and Debugging

Developers use LLM-powered tools like GitHub Copilot to suggest code completions and help debug errors in real time.

Content Creation

LLMs can assist writers by drafting articles, brainstorming ideas, or generating social media posts.

Education

AI-powered tutoring systems such as Khan Academy’s Khanmigo use LLMs to walk students through math problems step by step, helping them understand complex concepts.

Challenges and Ethical Considerations

LLMs like ChatGPT are powerful, but they come with significant challenges:

Bias and Fairness

Since the model learns from human data, it can inherit biases present in the data, leading to biased responses. Efforts are underway to mitigate biases, such as through adversarial training and other debiasing techniques, but these issues remain a challenge.

Misinformation

Without true understanding, LLMs can generate incorrect or misleading information that sounds plausible. For instance, ChatGPT may present outdated information as current. These models can also hallucinate, producing entirely fabricated facts or sources, especially when confident-sounding output is prioritized over factual accuracy.

Privacy Concerns

Large models may inadvertently reproduce sensitive information memorized from their training data, leading to privacy issues.

Conclusion: The Future of LLMs and Human-like AI

The development of LLMs like ChatGPT marks a significant milestone in the world of artificial intelligence. These models showcase the incredible potential of statistical learning and pattern recognition. However, they are still far from achieving true human-like intelligence, as they lack understanding, reasoning, and consciousness.

As we continue to improve LLMs, the future holds exciting potential. With better alignment, reduced biases, and greater contextual awareness, LLMs could transform industries ranging from healthcare to entertainment, even without matching human intelligence. Future models are also moving beyond language to combine text, images, and even video understanding in a single system.

The ongoing integration of language models into everyday tools signals a future where AI augments human capability, if developed and deployed responsibly.